[Or “my quest for the ultimate home-brew storage array.”] At my day job, we use a variety of storage solutions based on the type of data we’re hosting. Over the last year, we have started to deploy SuperMicro-based hardware with OpenSolaris and ZFS for storage of some classes of data. The systems we have built previously have not had any strict performance requirements, and were built with SuperMicro’s SC846E2 chassis, which supports 24 total SAS/SATA drives, with an integrated SAS expander in the backplane to support multipath to SAS drives. We’re building out a new system that we hope to be able to promote to tier-1 for some “less critical data”, so we wanted better drive density and more performance. We landed on the relatively new SuperMicro SC847 chassis, which supports 36 total 3.5″ drives (24 front and 12 rear) in a 4U enclosure. While researching this product, I didn’t find many reviews or detailed pictures of the chassis, so I figured I’d take some pictures while building the system and post them for the benefit of anyone else interested in such a solution.

Updates:

[2010-05-19 Some observations on power consumption appended to the bottom of the post.]

[2010-05-20 Updated notes a bit to clarify that I am not doing multilane or SAS – thanks, Mike, for reminding me to clarify that.]

[2011-12-20 Replacing references of ‘port multiplier’ with ‘SAS Expander’ to reflect the actual technology in use.. thanks commenters Erik and Aaron for reminding me that port multiplier is not a generic term, and sorry it took me so long to fix the terminology!]

In the systems we’ve built so far, we’ve only deployed SATA drives, since OpenSolaris can still get us decent performance with SSDs for read and write cache. This means that in the 4U cases we’ve used with integrated SAS expanders, we have only used one of the two SFF-8087 connectors on the backplane; this works fine, but limits the total throughput of all drives in the system to four 3gbit/s channels (on this chassis, six drives share each 3gbit/s channel). We built our most recent system with the intention of using it both for “nearline”-class storage and as a test platform to see if we can get the performance we need to store VM images. As part of this decision, we decided to go with a backplane that supports full throughput to each drive. We also decided to use SATA drives for the storage disks, rather than 7200rpm SAS drives (which would support multilane, but with the backplane we’re using it doesn’t matter) or faster SAS disks (as the SSD caches should give us all the speed we need). For redundancy, our plan is to use replication between appliances rather than running multiple heads attached to the same storage shelves; for an example of a multi-head/multi-shelf setup, see this build by local geek Mike Horwath of ipHouse.

When purchasing a SuperMicro chassis with a SAS backplane, there are a few things you should be aware of..


- There are different models of the chassis that include different style backplanes:

- ‘A’ style (e.g., SC847A) – This chassis includes backplanes that allow direct access to each drive (no SAS expander) via SFF-8087 connectors. In the SC847 case, the front backplane has 6 SFF-8087 connectors, and the rear backplane has 3. This allows full bandwidth to every drive while keeping the number of cables as low as possible. The downside, of course, is that you need enough controllers to provide 9 SFF-8087 connectors!

- ‘TQ’ style – not available for the SC847 cases, but in the SC846 chassis an example part number would be ‘SC846TQ’. This backplane provides an individual SATA connector for each drive; in other words, you will need 24 SATA cables, and 24 SATA ports to connect them to. This will be a bit of a mess cable-wise.. with the SFF-8087 option available, I don’t know why anyone would still be interested in this – if you have a reason, please comment! This is quite a common option on the 2U chassis – it can actually be difficult to purchase a 2U barebones “SuperServer” that includes SFF-8087 connectors.

- ‘E1’ style (e.g., SC847E1) – This chassis includes backplanes with an integrated 3gbit/s SAS expander, without multipath support. Each backplane has one SFF-8087 connector, so you only need two SFF-8087 ports in a SC847E1 system. The downside is that you are limited to 3gbit/s per channel, so you’d have 6 drives sharing each 3gbit/s channel on the front backplane, and 3 drives sharing each channel on the rear backplane. SuperMicro also has an upcoming ‘E16’ option (e.g., SC847E16), which supports SATA3/SAS2 at 6gbit/s per channel.

- ‘E2’ style (e.g., SC847E2) – Similar to the SC847E1, this includes a SAS expander on the backplane, but also supports multipath for SAS drives. Each backplane has two SFF-8087 connectors. The same caveats as the E1 apply. They also have an ‘E26’ version coming out soon (e.g., SC847E26), which will include SAS2 (6gbit/s) expanders.

- You can also choose the type of expansion slots you would like to support on the motherboard tray; you will need to match the tray to the type of motherboard that you purchase. Note that these are the same options available on their 2U chassis – the concept of the SC847 chassis essentially makes your motherboard choices the same as the 2U systems.

- ‘UB’ option (e.g., SC847A-R1400UB) – this option supports SuperMicro’s proprietary UIO expansion cards. It uses a proprietary riser card to mount the cards horizontally, and will support 4 full-height cards and 3 low-profile cards in the SC847. They get the card density by mounting the components for one (or more) UIO cards on the opposite side of the PCB from where you usually see them – the connector itself is still PCI-E x8, but the bracket and components are all on the opposite side. I have not ordered a chassis that uses UIO recently, so I’m not sure whether the sample part number includes riser cards. Note that you will need to purchase a SuperMicro board that supports UIO for this chassis.

- ‘LPB’ option (e.g., SC847A-R1400LPB) – this option supports 7 low-profile expansion slots. If you do not need any full-height cards, this gives you the maximum number of high-speed slots. This is the option you will need to go with if you want to use a motherboard from a vendor other than SuperMicro.
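To make the backplane trade-offs concrete, here is a small sketch that turns connector counts into drives per lane and worst-case uplink bandwidth per drive. It is back-of-the-envelope only: it assumes nominal line rates (3 or 6 Gbit/s, before encoding and protocol overhead), assumes every drive is busy at once, and ignores multipath, so the E2/E26 options are left out. The same arithmetic covers an SC846E2 with only one connector cabled: 24 drives over four lanes is six drives per 3 Gbit/s lane.

```python
from dataclasses import dataclass

LANES_PER_SFF8087 = 4  # each SFF-8087 connector carries four SAS/SATA lanes


@dataclass(frozen=True)
class Backplane:
    name: str
    drives: int
    connectors: int    # SFF-8087 connectors to cable (single path only)
    lane_gbit: float   # nominal line rate per lane

    def lanes(self) -> int:
        return self.connectors * LANES_PER_SFF8087

    def drives_per_lane(self) -> float:
        return self.drives / self.lanes()

    def gbit_per_drive(self) -> float:
        """Uplink bandwidth per drive when every drive is busy at once."""
        return self.lanes() * self.lane_gbit / self.drives


# Connector counts from the option list above. 'A' assumes 3 Gbit/s SATA drives;
# the 'E16' rows assume 6 Gbit/s expander uplinks.
SC847 = {
    "A": (Backplane("front", 24, 6, 3.0), Backplane("rear", 12, 3, 3.0)),
    "E1": (Backplane("front", 24, 1, 3.0), Backplane("rear", 12, 1, 3.0)),
    "E16": (Backplane("front", 24, 1, 6.0), Backplane("rear", 12, 1, 6.0)),
}

if __name__ == "__main__":
    for model, planes in SC847.items():
        total = sum(bp.connectors for bp in planes)
        print(f"SC847{model}: {total} SFF-8087 connectors")
        for bp in planes:
            print(f"  {bp.name}: {bp.drives_per_lane():g} drives/lane, "
                  f"{bp.gbit_per_drive():.2f} Gbit/s per drive")
```

The numbers line up with the list: the ‘A’ option needs nine connectors but gives each drive its own lane, while the E1 front backplane leaves each drive about 0.5 Gbit/s of uplink when all 24 are busy.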

I do wish that SuperMicro would offer a “best of both worlds” option – it would be great to be able to get a high amount of bandwidth to each drive, and also support multipath. Maybe something like a SAS2 backplane which only puts two or three drives on each channel instead of six? If they did two drives per channel with a SAS expander and supported multipath, it should be possible to get the same amount of total bandwidth to each drive (assuming active/active multipath) while keeping a reasonable number of SFF-8087 connectors, supporting multipath with SAS drives, and getting the bonus of controller redundancy. If anyone knows of an alternate vendor or of plans at SuperMicro to offer this, by all means, comment!
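The “two or three drives per channel” wish can be sanity-checked with the same kind of arithmetic. This sketch models a purely hypothetical multipath SAS2 expander backplane (no actual SuperMicro part is implied). It assumes SAS drives, since SATA drives only use one path, and active/active multipath so both paths carry traffic at the same time.

```python
import math

LANES_PER_SFF8087 = 4  # lanes per SFF-8087 connector


def multipath_backplane(drives: int, drives_per_lane: int,
                        lane_gbit: float, paths: int = 2) -> tuple:
    """Return (total SFF-8087 connectors, Gbit/s per drive) for a hypothetical
    expander backplane with `drives_per_lane` drives on each uplink lane and
    `paths` independent paths, all active at once."""
    lanes_per_path = math.ceil(drives / drives_per_lane)
    connectors_per_path = math.ceil(lanes_per_path / LANES_PER_SFF8087)
    gbit_per_drive = paths * lane_gbit / drives_per_lane
    return connectors_per_path * paths, gbit_per_drive


if __name__ == "__main__":
    for per_lane in (2, 3):
        front = multipath_backplane(24, per_lane, 6.0)  # 24 front bays
        rear = multipath_backplane(12, per_lane, 6.0)   # 12 rear bays
        print(f"{per_lane} drives/lane: {front[0] + rear[0]} connectors, "
              f"{front[1]:.1f} Gbit/s per drive")
```

Under these assumptions, two drives per lane works out to 10 connectors for the whole chassis at 6 Gbit/s per drive, and three per lane drops to 6 connectors at 4 Gbit/s per drive. That is the connector-count versus bandwidth trade-off the paragraph is describing.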

For the system I’m building, we went with the following components:

- SuperMicro SC847A-R1400LPB chassis – 36-bay chassis with backplanes that offer direct access to each drive via SFF-8087 connectors. 7 low-profile expansion slots on the motherboard tray.

- SuperMicro X8DTH-6F motherboard – Intel 5520 chipset; supports Intel’s 5500- and 5600- series Xeon CPUs. Has an integrated LSI 2008 SAS2 controller, which supports 8 channels via two SFF-8087 ports. 7 PCI-E 2.0 x8 slots. 12 total memory slots. IPMI with KVMoIP integrated. Two Gig-E network ports based on Intel’s newest 82576 chipset. This board is great.. but what would make it perfect for me would be a version of the board that had 18 memory slots and 4 integrated Gig-E ports instead of two. Ah well, can’t have it all!

- 2x Intel E5620 Westmere processors

- 24gb DDR3 memory; PC3-10600, registered/ecc.

- 4x LSI 9211-8i PCI-E SAS-2 HBA – 2 SFF-8087 ports on each controller; same chipset (LSI 2008) as the onboard controller. This gives me a total of 10 SFF-8087 SAS2 ports, which is one more than needed to support all the drive bays. I should also note that we haven’t had any problems with the LSI2008-based controllers dropping offline with timeouts under OpenSolaris; with our other systems, we started with LSI 3081E-R controllers, and had no end of systems failing due to bug ID 6894775 in OpenSolaris, which as far as I can tell has not yet been resolved. Swapping the controllers out for 9211-8i’s solved all the issues we were having.

- Variety of SuperMicro and 3ware SFF-8087 cables in various lengths to reach the ports on the backplanes from the controller locations.

- 2x Seagate 750gb SATA hard drives for boot disks.

- 18x Hitachi 2TB SATA hard drives for data disks.

- 2x Intel 32gb X25-E SATA-2 SSDs; used in ZFS as a mirrored ZFS Intent Log (ZIL) device; write cache. (Note: 2.5″ drives; needs a SuperMicro MCP-220-00043-0N adapter to mount in the hot-swap bays.)

- 1x Crucial RealSSD C300 128gb SSD; used in ZFS for an L2ARC read cache. (Also a 2.5″ drive; see note above.)
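A quick tally of the build list shows how bays and ports work out. The counts come straight from the list above; the sketch just does the arithmetic.

```python
BAYS = 36  # 24 front + 12 rear

# SFF-8087 ports: needed by the 'A' backplanes vs. provided by this build
PORTS_NEEDED = 6 + 3           # front + rear backplane connectors
PORTS_PROVIDED = 2 + 4 * 2     # onboard LSI 2008 + four 9211-8i HBAs

DRIVES = {
    "boot (Seagate 750gb)": 2,
    "data (Hitachi 2TB)": 18,
    "ZIL (Intel X25-E)": 2,
    "L2ARC (Crucial C300)": 1,
}

used = sum(DRIVES.values())
free = BAYS - used

if __name__ == "__main__":
    print(f"{used} bays used, {free} free; "
          f"{PORTS_PROVIDED} ports for {PORTS_NEEDED} connectors "
          f"({PORTS_PROVIDED - PORTS_NEEDED} spare)")
```

That leaves 13 open bays, all of them already cabled, with one HBA port to spare.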

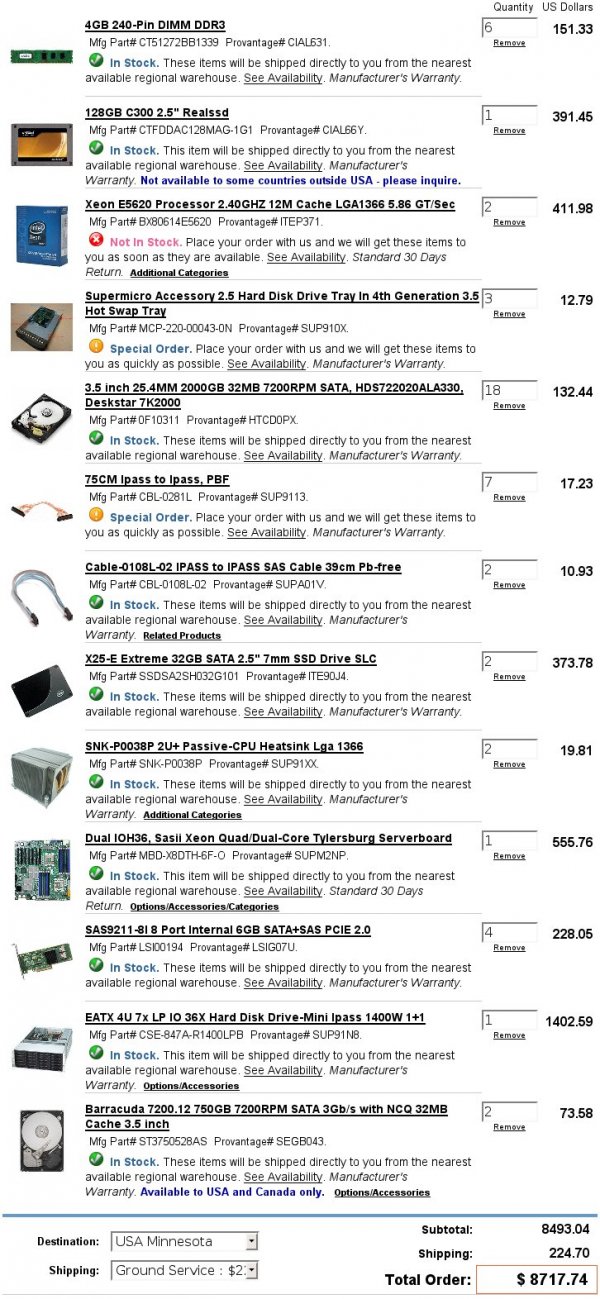

We purchased the system from CDW, with our own customer-specific pricing. I’m not allowed to share what we paid, but for your reference, I’ve whipped up a shopping cart at Provantage with (essentially) the same components. There is no special pricing here; this is just the pricing that their web site listed as of May 8 2010 at 11:18am central time. Note: I have no affiliation with Provantage. I have ordered from them previously, and enjoyed their service, but cannot guarantee you will have a good experience there. The prices here may or may not be valid if you go to order. You may be able to get better pricing by talking to a customer service rep there. I also had to change a few components for parts that Provantage did not have available – namely some of the various lengths of SFF-8087 cables. I erred on the side of ‘long’, so it should work, but I haven’t built a system with those exact cables, so I can’t guarantee anything.

As you can see, the total price for this system came out at just under $8500, or $8717.14 shipped. Not bad at all for a high-performance storage array with 18 2tb data drives and the ability to add 13 more.

If we do decide that this is the route to go for our VM image storage, the config would be similar to above, with the following changes at minimum:

- More memory (probably 48gb) using 8gb modules to leave room for more expansion without having to replace modules.

- Switch from desktop HDDs to enterprise or nearline HDDs (6gbit/s SAS if they are economical); probably also go with lower capacity drives, as our VMs would not require the same amount of total storage, and NexentaStor is priced by the terabyte of raw storage.

- Add more X25-E’s for ZIL/SLOG (either 4x or 6x total, still used in mirrored pairs), possibly also going with 64gb instead of 32gb. (More total drives should mean more total throughput for synchronous writes.) If Seagate Pulsars are available, also consider those.

- Add additional RealSSD C300’s for cache drives; the more the better.

- Add additional network capacity in the form of PCI-E NIC cards – either 2x 4-port Gig-E or 2x 10-GigE. This will allow us to make better use of IPMP and LACP to both distribute our network load among our core switches and use more than 2gbit total bandwidth.

In any case, on to some pictures of the chassis and build.

Chassis in shipping box – includes good quality rackmount rails and the expected box of screws, power cables, etc. First SuperMicro chassis I’ve ordered that is palletized.

Front of the chassis – 24 drive bays up front.

Rear of the chassis – 12 drive bays, and a tray for the motherboard above them. Also shows the air shroud to direct airflow over the CPUs; the only part of the chassis that feels cheap at all.. but it serves its purpose just fine.

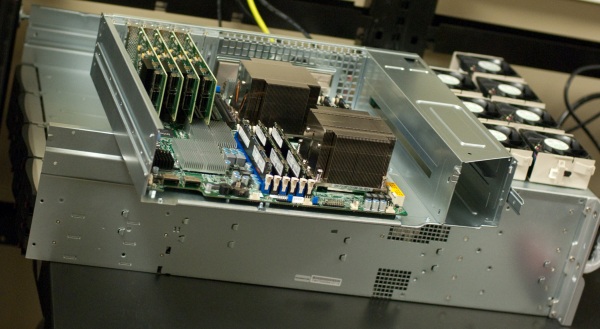

System with the motherboard tray removed. Note that as far as mounting is concerned, the tray is pretty much the same as a standard SuperMicro 2U system. You’ll need to order heatsinks, cards, etc. that would work in a 2U.

View of the system from the back with the motherboard and four front fans removed. You can see a bit of the front backplane in the upper right; two of the SFF-8087 connectors are visible. All cable routing goes underneath the fans; there is plenty of room under the motherboard for cable slack. You can also see the connectors that the power supplies slide into on the upper left hand corner, and a pile of extra power cables that are unneeded for my configuration underneath that.

Another shot of the front backplane. You can see five of the six SFF-8087 connectors (the other is on the right-hand side of the backplane, which is not visible). Also note the fans that I’ve removed to get better access to the backplane.

One of the power connectors that the fans slide into (white four-pin connector near the center of the picture); the SFF-8087 connector that is not visible in the picture above is highlighted in red.

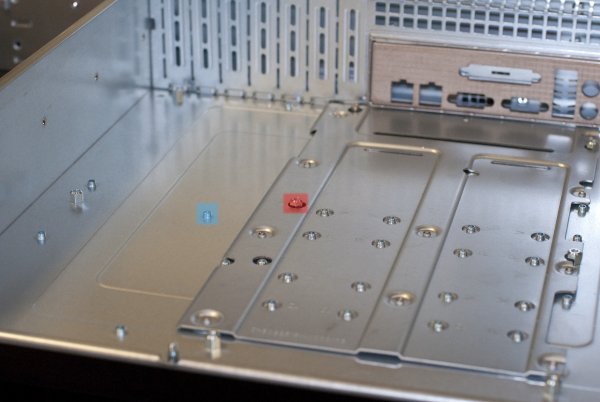

Motherboard tray before installing the motherboard. This tray uses a different style screw system than I’ve seen before; instead of having threaded holes that you screw standoffs into, they have standoffs coming up off the bottom (one highlighted in blue), which you screw an adapter onto (highlighted in red) which the motherboard rests on and is secured to.

A partial view of the rear backplane on the system; also the bundle of extra power cables and the ribbon cable connected to the front panel.

Labels on one of the power supplies. This system includes a pair of ‘PWS-1K41P-1R’ power supplies, which output 1400W at 220V or 1100W at 120V.

Motherboard installed on tray, with the four LSI SAS HBAs in their boxes.

One of the two Intel E5620 ‘Westmere’ Xeon processors set in the motherboard but not secured yet.

Both processors and 24gb of memory installed. No heatsinks yet.

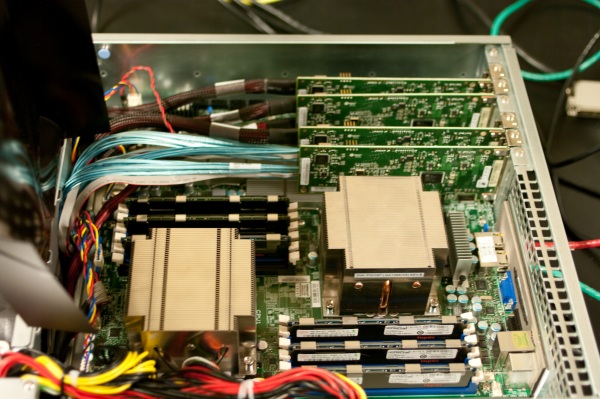

Motherboard tray complete and ready to be installed in the system. Heatsinks and LSI controllers have been installed. Note the two SFF-8087 connectors integrated on the motherboard, and eight more on the four controllers.

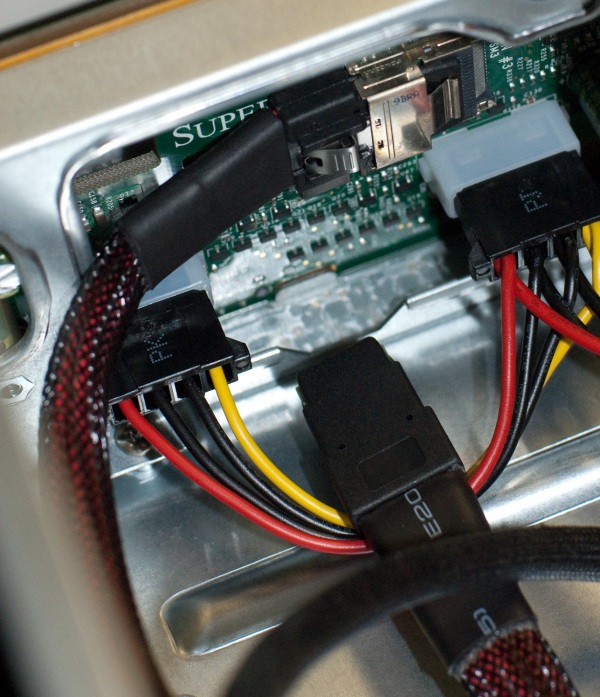

Prep work on the rear backplane: the chassis shipped with the power cables pre-wired, and I connected the SFF-8087 cable.

Motherboard tray installed back in the system; SFF-8087 cables connected to three of the four LSI controllers. I ended up moving one controller over for ease of cabling – notice the gap in the middle of the four controllers.

(Note: The pictures of the finished system below this point were taken on 5/7/2010; thanks to my coworker Colleen for letting me borrow her camera since I #natefail‘d to bring mine!)

The seven cooling fans to keep this system running nice and cool.

HBAs with all cables connected.

Finished system build with the top off. One power supply is slightly pulled out since I only have a single power cable plugged in.. if you have one cable plugged in but both power supplies installed, alas, the alarm buzzer is loud.

Front hard drive lights after system is finished – note that we don’t have every drive bay populated yet.

Rear drive lights while system is running.


The build-out on the system went fine for the most part; the only problem I ran into is that the motherboard did not have a BIOS installed which supported the relatively new Westmere processors. Fortunately I had a Nehalem E5520 I could borrow from another system to get the BIOS upgraded.. I wish the BIOS recovery procedure would work for unsupported processors, but ah well. I was pleased with the way the motherboard tray slides out; it makes it easy to get the cabling tucked underneath and routed so that they will not interfere with airflow. There also seems to be plenty of airflow to keep the 36 drives cooled.

I currently have NexentaStor 3.0 running on the system; we have not yet landed on what operating system we will run on this long-term.. but it will likely either be NexentaCore or NexentaStor. If we deploy this solution for our VM images (with some upgrades as mentioned above), we will almost certainly use NexentaStor and the VMDC plugin, but we’ll cross that bridge if we get there!

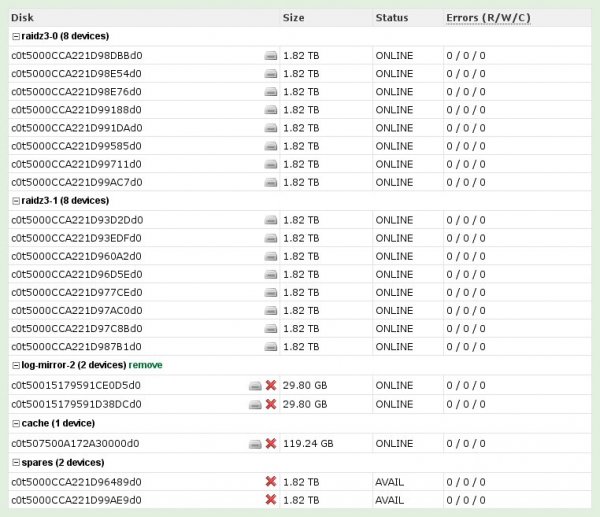

Here’s the disk configuration I have running at the moment with NexentaStor:

- ‘syspool’: Mirrored ZFS zpool with 2x750gb Seagate drives.

- ‘NateVol1’: ZFS zpool with..

- 2 RaidZ3 arrays with 8 2TB disks each

- 2 2TB disks set as spares

- 2 32gb Intel X25-E SSDs as a mirrored log device

- 1 128gb Crucial RealSSD C300 as a cache device

..and the obligatory screenshot of the data volume config:

This nets 18T usable space, and would allow for a simultaneous failure of any three data disks before there is any risk of data loss. (Each of the sub-arrays in ‘NateVol1’ has 3 parity disks, so I could also lose 3 disks from each of the sub-arrays without any issues.)
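For anyone checking the 18T figure: two 8-disk RAID-Z3 vdevs leave five data disks each, so ten 2TB disks’ worth of space. The sketch below assumes the “T” is the binary TiB that ZFS tools print, and it ignores ZFS metadata, RAID-Z padding, and reserved space, so real numbers will come in a bit lower. Hot spares add no capacity and are left out.

```python
TB = 10**12   # terabyte as drive vendors count it
TiB = 2**40   # the 'T' unit ZFS tools report


def raidz_usable_bytes(vdevs: int, disks_per_vdev: int, parity: int, disk_bytes: int) -> int:
    """Data capacity before ZFS overhead: parity disks contribute nothing."""
    return vdevs * (disks_per_vdev - parity) * disk_bytes


def guaranteed_failures(parity_per_vdev: list) -> int:
    """Disk failures the pool survives no matter which disks fail."""
    return min(parity_per_vdev)


if __name__ == "__main__":
    usable = raidz_usable_bytes(vdevs=2, disks_per_vdev=8, parity=3, disk_bytes=2 * TB)
    print(f"{usable / TB:.0f} TB = {usable / TiB:.2f} TiB")
    print("any", guaranteed_failures([3, 3]), "disks can fail")
```

20 decimal terabytes is about 18.19 TiB, which matches the “18T” reported for ‘NateVol1’.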

Again, this system only has two Gig-E NICs at the moment.. I’ve done I/O tests with NFS across one NIC and iSCSI across the other NIC, and can max out the bandwidth on both cards simultaneously with multiple runs of Bonnie++ 1.96 without the system breaking a sweat. I like! I should also note that this is with both deduplication and compression enabled.

Another note – before putting this into production, I did some simple “amp clamp” power usage tests on the box, with one power supply unplugged. The other power supply was plugged into 120V. While idling, it consumed 3.3A, and while running multiple copies of Bonnie in the ZFS storage pool (with all active disks lighting up nicely), it consumed 4.1A. Not bad at all for the amount of disk in this machine! I’d estimate that if the 13 additional drive bays were occupied with 2TB disks, and all those disks were active, the machine would consume about 5.5A – maybe slightly more. When we racked it up at the data center (in one of our legacy racks that is still 120V), the power usage bumped up by 3.2A combined across the A+B power, which matches nicely with my clamped readings. I’m very impressed – under 500 watts while running full out.. wow.
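Converting the clamp readings to watts is simple multiplication, with one caveat: amps times volts gives volt-amperes, and real power is that figure times the power factor. The sketch assumes a power factor of 1, so the results are upper bounds. The 5.5A full-bay figure is the estimate above, not a measurement.

```python
def clamp_watts(amps: float, volts: float = 120.0, power_factor: float = 1.0) -> float:
    """Convert a clamp-meter current reading to watts.

    With power_factor=1.0 this is volt-amperes, an upper bound on real power.
    """
    return amps * volts * power_factor


if __name__ == "__main__":
    idle, load, est_full = 3.3, 4.1, 5.5  # amps at 120V; est_full is an estimate
    print(f"idle ~{clamp_watts(idle):.0f} W, load ~{clamp_watts(load):.0f} W, "
          f"36 bays busy ~{clamp_watts(est_full):.0f} W")
    # Spread the estimated increase over the 13 empty bays
    per_drive = clamp_watts(est_full - load) / 13
    print(f"implied ~{per_drive:.1f} W per added drive")
```

Idle works out to about 396 W and load to about 492 W, consistent with “under 500 watts”. The 5.5A estimate implies roughly 13 W per additional busy drive.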

I will update this post once we decide on a final configuration “for real” and put this into production, but so far I’d highly recommend this configuration! If you’ve used the SC847 chassis, I’d love to hear what you think of it. I’d also love to try out the 45-bay storage expansion version of this chassis at some point – talk about some dense storage! :)

Great post! Would be interested in seeing the performance when bonding the 4-port Intel Gig-E PCI-E NICs with XenServer or VMware.

Does NexentaStor support bonding the NIC’s?

Yeah, NexentaStor can use active or passive LACP, or general Solaris IPMP. Assuming that we go this route, we’ll likely do 2 or 4 links to each of our core switches with LACP, and build an IPMP group out of those interfaces (at least I hope that config is supported under Nexenta – haven’t specifically tried creating an IPMP group of two LACP groups in NexentaStor yet.. I know it works in OpenSolaris, so the underlying tech supports it.) We’ll also probably do multiple VLANs to let us use iSCSI multipathing so a single transfer can consume more than 1gbit.

Thanks for the comment! I see you’re also debating on going with a ZFS solution.. best of luck with your choices. ;) If you do end up getting a good enough discount to go with Sun/Oracle’s Unified Storage, *make*sure* that the option you select includes ZIL if you want synchronous writes and need performance!

Is there room below the motherboard tray to mount anything, like say your SSDs if you did not want to use up hot-swap bays for them?

There is a fair amount of room under there, so something could certainly be rigged up. You’d have to be careful not to compromise the cooling system and to make sure that your cabling doesn’t get in the way, but beyond that, I think it could be done!

The chassis does actually also include brackets to mount a few drives internally.. from the manual, page 4-6:

It also specifies that the ‘Tray P/N’ is ‘MCP-220-84701-0N’.. I’d imagine that would be the same tray that they include one of, but am not sure. The description on SuperMicro’s site for that part is “Internal drive bay for one 3.5″ HDD or two 2.5″ HDD”, so it sure sounds like the same thing. I saw the brackets, but had no interest in mounting anything internally, so didn’t really pay attention to where they would go! It’s possible that there are actually standoffs underneath the motherboard tray.. that would seem like the most logical place. Unfortunately the manual doesn’t provide details on where these would be installed, at least not that I could find.

If you give it a try, please let me know how it goes! ;)

Replying to myself, yeehaw! I took a closer look through my images in Lightroom, and think I see where at least one of the drive mounts is.. take a look at this photo from above; if you look down to the bottom of the chassis from where the middle fan is there are mounting studs on each side, then a few in front of those.. they seem like they would be the right size.

Hey,

Awesome writeup! Finally someone did it! We’ve been looking at the SuperMicro vs the LSI JBOD shelves.

Looking at a similar solution: do you have any performance data on this build, i.e. raw IO throughput and IOPS? Have you done any bonnie++ runs, or a copy from RAM to a partition over iSCSI (particularly for VM performance)?

We’ve been having a few problems with the LSI 9240-8i around drivers on both OpenSolaris snv_134 and Nexenta 3.0.0 beta 3, with the cards running in JBOD mode, as well as dismal performance, although this might not have been the cards so much as the Western Digital 2TB Green drives that were giving us problems. Any advice or pointers would be appreciated.

Our LSI 9240-8i cards just died on us 2 days ago, and we had to replace them with an Adaptec card with JBOD support (ASR 5805), which works 1000% better. These LSI cards aren’t great; we have had 3 of them give us a lot of issues. We cannot see the cards in Solaris anymore: even though the PCI ID is detected, the drivers just refuse to attach, even after reinstalling Solaris and/or Nexenta. We’re tearing our hair out here. We managed to recover data by plugging the drives into a motherboard with just enough SATA ports to get the array running.

Typically the cards (LSI 9240-8i) ran 100% for 1-2 weeks and then just bombed out. No coming back. Still no word from anyone on why.

We are also now running iSCSI via COMSTAR with WCD=TRUE. This reduces speed but protects the integrity of writes during a power outage, so it ensures consistency of data. The write speed is also more consistent and less ‘bursty’, as some people will notice.

Local benchmarks: haven’t really done many yet; did run a couple bonnie runs, but didn’t record the results. If you have any quick suggestions for local benchmarks, would be happy to run them for you.

iSCSI: Have done quite a few Bonnie++-over-iSCSI runs, but again haven’t actually recorded the results, sigh! :) We’re limited to the 2xGE ports for that, and I do see both ports maxing out. I’ve tested both wcd=true and =false; with wcd=true, I can still max out both GigE ports.. but of course it’ll be limited by the speed of the X25-E mirror for ZIL (at least from what I can see in the ‘zpool iostat -v’ output.) If we do go this route for storage nodes, we plan on doing at least a pair of mirrored X25-E’s.. may go with 3 mirrored pairs to allow for tons of throughput.

Sorry to hear that you’ve had such poor luck with the LSI cards.. they have been rock solid for us so far. I wonder if it may be a difference between internal and external connectors or something along those lines. Surprising to hear that the Adaptec 5805’s are running better for you – I had heard previously about performance issues with those cards under OpenSolaris, even in JBOD mode. (They have been great for me on Linux nodes, and are my preferred RAID controller there.) Of course, if they are just being used as JBOD controllers, that makes them really, really expensive for this purpose. ;)

We had a ZIL device fail last night (Intel X25-E). We were running production with roughly 60 VMs on it, and it took the whole box down. After a reboot it came back and reimported the pools, etc. It’s just been such a headache that we’re considering moving this onto our NetApp instead.

Btw, David and I are on the same project.

I am just not sure/convinced of the stability. The LSI cards are much more affordable, but they just break (well, the 9240’s at least). Now there’s this ZIL failure, after we swapped out another 2 of the Intel X25-E’s the night before for a similar problem.

Any ideas on how to get this working better or to be more stable?

To speed test, we usually test from a VM with its disk mounted over iSCSI, writing from memory. First we load up shared memory in the VM:

dd if=/dev/urandom of=/dev/shm/testdata bs=8k count=100k

Then we write it out:

dd if=/dev/shm/testdata of=/root/testdata bs=8k

Typical performance with WCD=FALSE is about 6.5MB/s

Setup is:

- Xeon 3400

- 16GB RAM

- Adaptec 5805 card in JBOD mode

- 2x Intel X25-E 32GB ZIL

- 1x Super Talent 128GB read cache

- 8x 1TB Seagate hard drives (4 RAID pairs)

- 2x OS disks
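The dd test stages random data in /dev/shm and then copies it to disk, which keeps /dev/urandom speed out of the measurement. One thing to watch is that the copy can finish while data is still sitting in the VM’s page cache unless the write is flushed (GNU dd has conv=fsync for that). A rough Python equivalent, offered as a sketch of the same idea rather than the commenters’ exact method, pre-generates the data and calls fsync before stopping the clock:

```python
import os
import tempfile
import time


def timed_write_mb_s(path: str, total_bytes: int, block_size: int = 8192) -> float:
    """Write `total_bytes` of pre-generated random data to `path` in
    `block_size` chunks, fsync, and return throughput in MB/s (10**6 bytes/s)."""
    # Generate the data first so urandom speed is not timed (like the /dev/shm step).
    payload = memoryview(os.urandom(total_bytes))
    start = time.perf_counter()
    with open(path, "wb") as f:
        for offset in range(0, total_bytes, block_size):
            f.write(payload[offset:offset + block_size])
        f.flush()
        os.fsync(f.fileno())  # don't stop the clock until data is on stable storage
    return total_bytes / (time.perf_counter() - start) / 1e6


if __name__ == "__main__":
    # Small smoke test in a temp dir. For a real run, point the path at the
    # iSCSI-backed filesystem (e.g. /root/testdata) with 8192 * 102400 bytes,
    # the same size as bs=8k count=100k.
    with tempfile.TemporaryDirectory() as tmp:
        rate = timed_write_mb_s(os.path.join(tmp, "testdata"), 8192 * 1024)
        print(f"{rate:.1f} MB/s")
```

Forcing the flush makes the result reflect the storage path (iSCSI, ZIL, disks) rather than how fast the VM can fill its own cache.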

Yikes! That is really scary. ;(

Are all your 9240’s out of the same lot? I wonder if you may have just gotten a bad batch of cards.. I don’t have any cards with the external connectors, but we have 3 9211-8i’s that have been in production for a few months with no issues (OpenSolaris boxes – snv_124, snv_133, and Nexenta 3.0.0beta – NexentaOS_134a.) I also have the 4 9211-8i’s in the new server that we’re testing without any problems. Have you checked with your local LSI distributor to see if they have seen that behavior?

When the ZIL failed and caused a reboot – was it a X25-E in a mirrored ZIL, or a single drive as a ZIL? I wouldn’t expect either case to cause a reboot, but who knows. When working with Sun on the issues we had with the 7210’s, it really sounded like their default action if something unexpected happened with the storage was to reboot.. so I guess that could extend to OpenSolaris/NexentaStor too.

Here’s results of the benchmark you mentioned above.. this is on a XenServer VM, with an iSCSI lun to the storage machine described in this article..

The creation of the file is slow (as expected – coming from urandom), writing the file back to disk is nice and quick – about the speed of a GigE link, which is what I’d expect.. this is with a zvol with write cache disabled.. here is the ‘stmfadm list-lu -v’ for the LUN:

Hi. LSI seems to be a bit slow to the party: great initial support, and now they are gone. I think we scared them off with this problem!

After even more testing, we suspect it’s an OpenSolaris & Nexenta driver bug. The cards work fine in Windows.

We had an Intel ZIL device completely lock up. The box froze immediately, taking everything with it. Dead.

Not sure what to do now, as we’re stuck with 3 LSI 9240’s that aren’t working and two Intel X25 SSDs that ‘broke’, so we aren’t sure if we should go for AVS or just use our NetApp until we can stabilize the setup (how, I don’t know!)

Oh interesting.. I finally *looked up* the 9240, and see that it’s actually a MegaRAID controller, which uses the ‘mr_sas’ driver.. which is apparently distinctly different from the ‘mpt_sas’ driver? Curious – have you tried a real mpt_sas card?

I’d think you should be able to RMA the Intel drives at least?

I can’t speak for the 9240, but on other LSI cards you can use either the “IT” (JBOD, mpt_sas driver) firmware or the “IR” (RAID, and I believe mr_sas driver) firmware.

Since you have the E5620 CPU’s with AES-NI instructions, what are the chances of you running some benchmarks on dm-crypt in linux with aes-ni in use? I’d love to see if the new aes-ni allows >200MB/sec reads/writes with RAID configs!

Using something like the script here?

http://www.holtznet.de/luks/

Any idea what kernels support the AES-NI instructions? If I could do a LiveCD and run the benchmark against a ramdisk, should be doable..
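Before benchmarking, it is worth confirming that the CPU actually advertises AES-NI. On Linux, CPU features are listed on the `flags` lines of /proc/cpuinfo, and AES-NI shows up as `aes`. The sketch below checks only that CPU flag. Whether dm-crypt uses AES-NI also depends on the kernel having the aesni_intel driver built in or loaded, which it does not check.

```python
def has_aesni(cpuinfo_text: str) -> bool:
    """True if any 'flags' line in /proc/cpuinfo-style text lists the 'aes' flag."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # Match the whole word 'aes' on a flags line, not substrings elsewhere
        if sep and key.strip() == "flags" and "aes" in value.split():
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("AES-NI:", has_aesni(f.read()))
    except FileNotFoundError:
        print("no /proc/cpuinfo (not Linux?)")
```

Run from a LiveCD, this quickly shows whether the E5620s expose the instructions before any dm-crypt numbers are collected.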

Hi Paul,

Just thought I’d chime in here on the AES-NI performance. Under Linux 2.6.33 on an i5 661 through dm-crypt (AES-CBC-ESSIV) I saw 670MB/sec sequential read throughput. Native sequential read of the array was 750MB/sec. On slower devices I saw virtually no discrepancy in speed between the dm-crypt device and the native device.

Keep in mind that was one thread on one core. Developers are working on parallelizing dm-crypt, but currently that is in a very experimental state and basically not working at all. Hopefully in a little bit we’ll see that improve.

Excellent, thanks Matt! I actually just ordered up a few more 5620’s, and was going to try this out, but you beat me to the punch. ;)

Sweet! I am able to get 1GB/sec on my RAID, but when dm-crypt is going it’s at 100MB/sec. I am ordering an E5620 with AES-NI (already compiled my kernel for it) to combat this. It will be AMAZING once aes-ni AND multi-threading is active on dm-crypt.

Thanks!

Can you comment about the Supermicro SES2 enclosure management support with OpenSolaris/Nexenta? Did you get the harddisk failure LEDs of the Supermicro backplanes working?

Also could you check the SCSI infos.. is there a separate SES2 SCSI device visible through each SFF-8087 (iPass x4) connector?

Thanks! Great article!

I didn’t test the drive failure LEDs.. any recommendations on how to do that would be appreciated, I’m still a bit of a Solaris noob. ;) I can test it next time someone is down at the data center. Is the Drive ID light the same type of test?

Also not quite sure how to find out if it sees the SES2 enclosure.. here’s the results of a ‘cfgadm -al’, excluding the USB devices; I’m *guessing* some of the ‘unconfigured’ ‘scsi-sas’ devices could be the SES, but not really sure? The ‘disk-path’ devices add up to 23, which is the number of drives in the machine.

Replying to myself.. ;)

I found the ‘sas2ircu’ command, and am able to see Enclosure details with it, an example from the first controller, excluding the disks..

(skipping disks)

Each controller returns a different ‘Enclosure information’ entry.. not sure if it’s the card emulating it, or if it’s receiving the information via SES2. The ‘Enclosure#’ displays as 1 for all of ’em..

I’d like to contribute my experience with the sas2ircu command.

I downloaded it from the Supermicro website and the version was 5.00.00.00 (2010.02.09).

The locate command wasn’t working. My controller’s firmware was 2.xx.xx.xx so I updated it to 5.00.13.00 and the BIOS to 7.05.01.00. It’s working fine now.

I’m not surprised. We’ve had these problems with the MegaRAID controllers (MegaCli v4 will sometimes fail with a firmware 9.x).

BTW, I had to use Windows (ugh!) to update the firmware as I couldn’t find a sas2flash command for Solaris. LSI is currently researching this for me (their support staff is awesome).

Hmm.. I think “cfgadm -la” should already have listed some SES (SCSI enclosure) devices..

– try and paste: “cfgadm -lav”

– Do you have /dev/es/ses? devices? try: “/usr/lib/scsi/sestopo”

Also “sdparm” should be able to communicate with the SES devices.

First step is to get the SES stuff working, after that you need to run a daemon that actually turns on the LEDs in the case of disk failures.

Oh, please also try running “fmtopo”.

See here for example output: http://defect.opensolaris.org/bz/show_bug.cgi?id=12314 and http://blogs.sun.com/eschrock/entry/external_storage_enclosures_in_solaris

Full output of ‘cfgadm -lav’ is available at pastebin.

Did a ‘find’ across /dev/ for anything with ‘ses’ in it.. nothing. ;(

Not seeing a ‘sestopo’ anywhere on the Nexenta node.. fmtopo just returns the physical devices in the machine; does not enumerate the disks at all.

I will have to try an OpenSolaris LiveCD to see if this may be something Nexenta-related.. and make sure that the backplanes are jumpered for SES2!

So yah, you talk a lot about SAS and then use SATA disks – if you are going to multi-head this and use multiple paths, won’t you need SAS disks to do that, or a SAS interposer between the backplane and each drive to provide the full SCSI multipath structure?

I’m still working on my stuff that I’ll post in a bit for my multi-head, multi-path, SAS layout for our VMware infrastructure.

At this point, we’re just looking at doing regular single-path SATA to the disks, and using replication for redundancy.

It’d be great to hear how your multi-shelf multi-head build goes.. I’ll need to dig into the new version of the HA plugin, looks interesting! (You are going NexentaStor with the HA plugin I assume?)

Here’s my reason – splitting drives across controllers. We went with an SC846 case, and my original plan was to use four controllers, each handling six drives, so that when I created three eight-disk RAID-Z2 vdevs, each controller would run two drives of each vdev, and even after a controller failure each vdev would survive (albeit degraded and with no remaining redundancy). But we bought the A version (my error), so there was no way to control six drives from one controller – the choices were four drives or eight drives. Next time I’ll probably get the TQ version (though I’ll admit cable routing is a lot easier this way).
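The controller-splitting argument is a counting problem: a RAID-Z2 vdev survives as long as no single controller holds more than two of its disks. Here is a small sketch that spreads drives round-robin across vdevs and reports the worst-case loss from one controller failure. The 3 × 8 layout is a hypothetical stand-in for the eight-drives-per-controller option, not a configuration from the comment.

```python
RAIDZ2_TOLERANCE = 2  # a RAID-Z2 vdev can lose two disks and keep running


def build_layout(controllers: int, drives_per_controller: int, vdevs: int) -> dict[int, list[tuple[int, int]]]:
    """Assign drives round-robin to vdevs; returns vdev -> [(controller, port), ...]."""
    layout: dict[int, list[tuple[int, int]]] = {v: [] for v in range(vdevs)}
    for controller in range(controllers):
        for port in range(drives_per_controller):
            index = controller * drives_per_controller + port
            layout[index % vdevs].append((controller, port))
    return layout


def worst_case_loss(layout: dict[int, list[tuple[int, int]]]) -> int:
    """Most disks any one vdev loses when any single controller fails."""
    controllers = {ctrl for disks in layout.values() for ctrl, _ in disks}
    return max(
        sum(1 for ctrl, _ in disks if ctrl == failed)
        for failed in controllers
        for disks in layout.values()
    )


# The planned layout: four controllers x six drives, three 8-disk RAID-Z2 vdevs.
planned = build_layout(controllers=4, drives_per_controller=6, vdevs=3)
print({v: len(d) for v, d in planned.items()})
print(worst_case_loss(planned) <= RAIDZ2_TOLERANCE)  # degraded but alive

# Hypothetical alternative: three controllers x eight drives.
eight_per_controller = build_layout(controllers=3, drives_per_controller=8, vdevs=3)
print(worst_case_loss(eight_per_controller) <= RAIDZ2_TOLERANCE)
```

With six drives per controller each vdev loses exactly two disks when a controller dies; with eight per controller, the pigeonhole principle forces at least one vdev to lose three.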

Makes good sense – thanks! Seems like multipath would be a good way to get around that particular issue.. but right now (at least as I understand it) you have to trade off total bandwidth to get multipath.

I can’t imagine having to cable 36 individual drives in the 847 cases.. yowza! :) I’ve done 24 in an ancient case I have at home, and it was a huge pain.. of course SuperMicro does a bit better job at providing tie-down points for cable management, etc.

That’s my understanding as well, and I wanted bandwidth more.

That’s what I have a minion for. :^)

Can you confirm that the SC847A-E1’s backplane only uses 2 SAS ports of the 8087 cable?

I was under the impression that the cable supported 4 ports, and so did the backplane. I’m using an LSI 9211-4i controller.

What I’m trying to discover is whether I’m using only half the capacity of the LSI controller with that backplane. I’ve contacted SuperMicro about their new backplane that uses the LSISAS2X36 chipset, which supports 36 ports.. but I’m still not sure how many ports the backplane makes available.

I don’t have an E1.. but it should have a single SFF-8087 connector back to the motherboard, of which all four channels will be used. Were you reading my mention about only needing two SFF-8087 connections for the 847E1? If so, what I meant is that you will need one 4-channel SFF-8087 cable for the front backplane, and one for the back.

Hope this helps?

Helped a lot, thanks!

I’m testing a Promise VTrak J610sd JBOD with Seagate 1TB SAS disks on NexentaStor 3.x (snv 134e). I ran ‘dd if=/dev/zero of=/dev/rdsk/c5XXXXXXd0s0 bs=128k count=80000’ to check the basic throughput and found that with multipath enabled (two SAS cables connected) I got 14MB/s, and if I unplug one SAS cable I get 115MB/s.

The card is an LSI 3081E.

# raidctl -l 6

Controller Type Version

—————————————————————-

c6 LSI_1068E 1.26.00.00

http://www.promise.com/storage/raid_series.aspx?region=en-US&m=578&sub_m=sub_m_2&rsn1=1&rsn3=2

Has anyone seen a similar performance difference with and without multipath?
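For scale, the dd invocation above writes 80,000 blocks of 128 KiB, which is exactly 10,000 MiB (about 10.5 GB). A quick calculation of how long that takes at each reported rate, assuming "MB/s" here means 10^6 bytes per second:

```python
BLOCK_SIZE = 128 * 1024                 # bs=128k -> 131,072 bytes
BLOCK_COUNT = 80_000                    # count=80000
TOTAL_BYTES = BLOCK_SIZE * BLOCK_COUNT  # 10,485,760,000 bytes = 10,000 MiB


def seconds_at(mb_per_s: float) -> float:
    """Runtime for the whole write, assuming 1 MB = 10**6 bytes."""
    return TOTAL_BYTES / (mb_per_s * 10**6)


for label, rate in (("multipath (two cables)", 14), ("single cable", 115)):
    print(f"{label}: {rate} MB/s -> {seconds_at(rate):.0f} s")
print(f"slowdown with multipath: {115 / 14:.1f}x")
```

That is roughly twelve and a half minutes versus a minute and a half for the same 10,000 MiB.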

I’m afraid I’m not the person to ask about this.. ;( Perhaps try Nexenta support or the OpenSolaris storage-discuss mailing list?

Sorry I can’t be more help – I don’t have any multipath arrays on osol machines yet..

Outstanding job, thanks for the large photos!…

Could you give us an idea of the relative noise of this beast? Is it louder than a Dell desktop, but quieter than a Netapp? I know these are useless stats, but I want to know if it sounds like a jet or not. The new HP blades are *amazingly* silent even under heavy disk load, quieter than a Dell! Some SuperMicros are dead quiet too; I’m told their PWS-1K41P-1R-SQ, sold in the 747TG-R1400B-SQ, is, but I don’t know.

Oh, not useless at all! A stat I failed to mention, so thanks for asking!

This system is loud, but not Netapp or (old from your comment?) HP c-Class enclosure loud. ;) We have some foot traffic through the room where it was being built (our company has a weight loss competition, and since this room is fairly private, the scale is in there), and people commented on the noise, but normal conversation can still be heard.

I did not build for quiet – one thing that would help is to ensure your motherboard has enough fan ports for all seven fans up front, and then buy some fan extension cables to reach the ports that aren’t at the front of the board. The board I purchased does have plenty of fan connectors, but only five up front – and in this build I didn’t care about noise (it’s going in a data center that is crazy loud already), so I didn’t bother with the extensions. With all the fans on the motherboard, you’d be able to quiet them down using the fan control features in the BIOS, unless they are needed for heat. In mine, two fans are connected to the backplane, and they run pretty fast all the time.

The power supplies are also loud – someone in a thread over at Hard Forum mentioned the same power supply that you did.. the part number is almost identical, so I would *hope* that it would work.. cooling could be a concern though? In any case, if that power supply would work and keep it cool, and you connected all 7 fans to the motherboard, I’d expect it to be much quieter – until you start cranking the drives to full-bore, generating heat, and needing it to be cool – then prepare for noise. ;)

Has anyone been able to get the disk serial number from OpenSolaris ?

The sas2ircu command shows the serial but ‘iostat -En’ is empty:

Vendor: ATA Product: ST31000340NS Revision: SN06 Serial No:

With the serial, I would be able to know which slot it refers to.

Here is a not-so-pretty way of mapping the device to the physical slot:

# format -e

> (choose disk)

> scsi

> inquiry (get the serial from here)

# sas2ircu 0 display | grep -A6 | head -1 | awk '{ print $4 }'

I’m going through the code to understand why iostat doesn’t show the serial, since it seems to be available in the INQUIRY return. Perhaps it’s not in the format that iostat expects.. that would explain why the device id (cXtXdX) doesn’t match the hex chars (which seem to be generated based on hostid+timestamp).
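The same lookup can be done without the grep/head/awk chain by parsing the whole `sas2ircu <controller> display` output once and building a serial-to-slot table. This is a sketch that assumes a per-device block layout (a "Device is a ..." header followed by "Key : Value" lines). That layout is an assumption about sas2ircu output rather than something shown in this post. The first serial is the one from this thread, and `EXAMPLE02` is a placeholder.

```python
# Assumed, trimmed sas2ircu display layout; EXAMPLE02 is a placeholder serial.
SAMPLE_DISPLAY = """\
Device is a Hard disk
  Enclosure #                             : 1
  Slot #                                  : 0
  State                                   : Ready (RDY)
  Model Number                            : ST31000340NS
  Serial No                               : 9QJ6EAZV
Device is a Hard disk
  Enclosure #                             : 1
  Slot #                                  : 1
  State                                   : Ready (RDY)
  Model Number                            : ST31000340NS
  Serial No                               : EXAMPLE02
"""


def serial_to_slot(display_output: str) -> dict[str, tuple[int, int]]:
    """Map each disk serial to (enclosure, slot) from sas2ircu display text."""
    devices: list[dict[str, str]] = []
    current = None
    for line in display_output.splitlines():
        if line.startswith("Device is a"):
            current = {}  # start a new device record
            devices.append(current)
        elif current is not None and ":" in line:
            key, _, value = line.partition(":")  # split on the first colon only
            current[key.strip()] = value.strip()
    wanted = ("Serial No", "Enclosure #", "Slot #")
    # Skip records (e.g. enclosure services devices) that lack any wanted field.
    return {
        dev["Serial No"]: (int(dev["Enclosure #"]), int(dev["Slot #"]))
        for dev in devices
        if all(k in dev for k in wanted)
    }


print(serial_to_slot(SAMPLE_DISPLAY)["9QJ6EAZV"])
```

On a live system the text could come from `subprocess.run(["sas2ircu", "0", "display"], capture_output=True, text=True).stdout` instead of the sample string.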

And here’s the pretty way of doing it:

# ls -lart /dev/dsk/c5t5000C5001A3734C3d0s2

lrwxrwxrwx 1 root root 48 2010-05-17 09:27 /dev/dsk/c5t5000C5001A3734C3d0s2 -> ../../devices/scsi_vhci/disk@g5000c5001a3734c3:c

# prtconf -v /devices/scsi_vhci/disk@g5000c5001a3734c3 | grep -A1 serial

name='inquiry-serial-no' type=string items=1 dev=none

value='9QJ6EAZV'

http://mail.opensolaris.org/pipermail/zfs-discuss/2010-May/041498.html
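The prtconf approach is also easy to script. This sketch pulls every `name='...'` / `value='...'` pair out of `prtconf -v` text. The serial lines match the output above. The `inquiry-product-id` entry (carrying the model string from the earlier iostat line) and the exact indentation are assumptions about what the full output looks like.

```python
import re

# Assumed prtconf -v excerpt; the serial lines mirror the output above.
PRTCONF_SAMPLE = """\
        name='inquiry-product-id' type=string items=1 dev=none
            value='ST31000340NS'
        name='inquiry-serial-no' type=string items=1 dev=none
            value='9QJ6EAZV'
"""

NAME_RE = re.compile(r"name='([^']+)'")
VALUE_RE = re.compile(r"value='([^']*)'")  # only quoted (string) values are captured


def prtconf_properties(text: str) -> dict[str, str]:
    """Pair each property name line with the quoted value line that follows it."""
    props: dict[str, str] = {}
    pending = None
    for line in text.splitlines():
        name = NAME_RE.search(line)
        if name:
            pending = name.group(1)
            continue
        value = VALUE_RE.search(line)
        if value and pending is not None:
            props[pending] = value.group(1)
            pending = None
    return props


props = prtconf_properties(PRTCONF_SAMPLE)
print(props["inquiry-serial-no"], props["inquiry-product-id"])
```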

Also try grepping the same way for “product” to get the model. Very useful. Thanks for posting.

Great review… Kudos to you.

I just ordered a SC847A-R1400LPB and a X8DTH-6F … so our systems are looking mighty similar ;)

Thanks for a great post; now I know what to expect when the parts start to appear at my doorstep!

A question on your SSD choice: is there a reason why you use 2 different types of SSD instead of just one?

1. Intel X25-E Extreme 32GB 2.5″ SSD

2. Crucial C300 128G 2.5″ SSD

Can’t you use either Intel or Crucial to do it all ?

Sorry for my late reply; it’s been a crazy few days! Let us know how your build goes. ;)

I used two different types because of the different purposes..

1) The drives for zil/slog should ideally be very fast, and not degrade in performance.. because of the issues that MLC drives still generally have with degrading performance, I went with the X25-E’s – they are SLC-based.

2) For the read cache (l2arc), write speed isn’t as important as read speed and raw space – so in that case, it was far less expensive to buy RealSSD’s. Write performance may degrade over time, but if it does, I can also easily remove the drive from service, run Crucial’s utilities on it to restore performance, and put the drive back online.

It’ll be really interesting to see how this landscape changes as the ‘enterprise MLC’ drives come out..

Thank you so much for this review. It is incredibly useful. You really capture the strength of SAS in a clear and practical overview.

Regarding this:

Is there no way of obtaining this result with the E2 or E26 boards? E.g. by using the dual SAS ports per plane to have fewer drives per port, instead of using them as redundant ports? In a way “stripe RAID-ing” the ports, instead of “mirror-RAIDing” them.

… and then hopefully being able to rely on good failover behavior when something related to either port fails – that is, falling back to shunting the entire data flow over the single remaining port.

I don’t know exactly what SAS can do :)

Well… as Nate knows, I have been struggling with this setup (same mobo and chassis), trying to get the onboard IPMI to stay active for longer than 1-4 hours without failing (when it failed, I could no longer ping the dedicated IPMI address). I am happy to report I finally resolved this, and of course it came down to user error :)

I had installed the Nexenta trial edition, and it would always cause IPMI to stop working. I built my ZFS disk pool and plopped a couple of VM images down on it, and they ran fine. No errors on the Solaris/Nexenta OS side that I could find.

Well, then I would install OpenSolaris and CentOS, and those would always run fine without IPMI failing, so this pointed me to something software-related, or something Nexenta was running that would stop IPMI. Both Nexenta and SuperMicro were fielding my questions and giving me great support (I was talking to the head of engineering for IPMI development at SuperMicro, and I had not even purchased Nexenta support yet at the time). But in the end I was still left scratching my head, as we could not find a cause.

Well, the other day I was running the LSI sas2ircu utility (Nate posted on this), and I noticed that on one of the controllers all the disks were showing up in slot 255 (the utility can see up to 255 slots in a compatible chassis, I guess). That made me look more closely at the controller and the cabling, which I had already double-checked.

Well, it turns out that I did not have one of the cables pushed in all the way on the rear backplane. We are talking probably less than half a millimeter here. :) Apparently it was pushed in enough for the disks to be seen, but I am guessing the SGPIO or whatever signaling was not working. Those SAS connectors on that backplane are hard to get at. Once I reseated that connector and ran the sas2ircu utility again, sure enough, the disk slots showed up correctly.

I fired the box back up and now I have a working IPMI solution.

I just thought I would post and maybe save somebody quite a lot of time diagnosing this. So always triple check your cabling if you are having an issue. :)

-Scott
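Scott's symptom, every disk on one controller reported in slot 255, is easy to check for after recabling. Here is a minimal sketch that counts devices whose `Slot #` line reads 255 in `sas2ircu <controller> display` text. Treating 255 as "slot unknown" is an interpretation based on this thread, and the sample text is a trimmed, assumed layout.

```python
import re

SLOT_RE = re.compile(r"^\s*Slot #\s*:\s*(\d+)\s*$", re.MULTILINE)
UNKNOWN_SLOT = 255  # value reported while one backplane cable was not fully seated


def unknown_slot_count(display_output: str) -> int:
    """Count devices that report slot 255 instead of a real bay number."""
    return sum(1 for m in SLOT_RE.finditer(display_output) if int(m.group(1)) == UNKNOWN_SLOT)


# Assumed, trimmed sas2ircu display layout.
SAMPLE = """\
Device is a Hard disk
  Enclosure #                             : 2
  Slot #                                  : 255
Device is a Hard disk
  Enclosure #                             : 2
  Slot #                                  : 255
"""

if unknown_slot_count(SAMPLE):
    print("Some disks report slot 255: reseat the backplane cables and re-run sas2ircu")
```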

Oh my gosh! What a weird scenario.

It boggles my mind trying to think of how the two could be related.. but so glad it’s finally resolved! :)

You talk about ‘Port Multiplier’ in your review.

A ‘Port Multiplier’ is a SATA-specific device; it is mostly ‘dumb’ and has reliability issues when a single drive misbehaves.

The device on the E1 and E2 backplanes is a SAS Expander.

Also, multipath works fine on my E1 backplane connected to LSI 3081 and LSI 9211-8i on RHEL5.

The E2 is to provide expander-redundancy, but it does require dual-ported SAS HDDs which are more expensive.

Indeed – 100% accurate. Thanks for the clarification; I have a nasty tendency to apply terms generically where they should not be!

Now that the E26 chassis is available, I am really tempted to start doing new builds based on that chassis with multiple HBAs – the redundancy would rock, and with dual 6Gb/s multi-lane connections to each backplane, there isn’t a large risk of over-subscription like there can be with the 3Gb/s models (especially with SSDs that can consume an entire 3Gb/s lane!)
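The over-subscription point is easy to quantify. SAS lanes at these speeds use 8b/10b encoding, so a 3Gb/s lane carries about 300 MB/s of payload and a 6Gb/s lane about 600 MB/s. The sketch below compares that with SSD throughput. The 250 MB/s per SSD is an assumed round figure for a SATA SSD of the era, not a measurement from this build.

```python
def lane_payload_mb_s(line_rate_gbit: float) -> float:
    """Payload MB/s of one lane, assuming 8b/10b encoding (10 line bits per data byte)."""
    return line_rate_gbit * 1000 / 10


def ssds_per_link(line_rate_gbit: float, lanes: int, ssd_mb_s: float = 250.0) -> float:
    """How many SSDs streaming at ssd_mb_s it takes to fill a link of `lanes` lanes."""
    return lanes * lane_payload_mb_s(line_rate_gbit) / ssd_mb_s


for rate in (3, 6):
    print(
        f"{rate}Gb/s lane: {lane_payload_mb_s(rate):.0f} MB/s; "
        f"one 4-lane port fills with {ssds_per_link(rate, 4):.1f} SSDs, "
        f"two 4-lane ports with {ssds_per_link(rate, 8):.1f}"
    )
```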

The biggest issue I’m seeing so far is I’m not finding anyone who has figured out a “clean” way to get an interposer in the path for SSDs.. SuperMicro has a SAS2 interposer available now (part number AOC-SMP-LSISS9252); however, I have not yet been able to find any documentation on how this interposer would be mounted between the drive and the backplane.. there doesn’t seem to be enough room on SuperMicro’s 2.5″ to 3.5″ drive sleds. It’s probably more than possible to “frankenstein” the device in by taking a standard drive bracket, using a 2.5″ to 3.5″ adapter, and drilling some holes.. but what a hassle! If anyone has information on how this interposer can be mounted, I’d love to hear it!

Of course, SAS2 SSD’s would be nicer, but they are, well, expensive. ;)

Thanks for reading the article, and for the clarification!

I’m trying to figure out the same thing… two years later hah. The only idea I’ve come up with that might work is getting an Icy Dock ssd converter and finding a Supermicro drive tray that will allow an interposer on it.

FYI, I have heard that the drive sleds included with the Super Storage Bridge Bay systems include a drive bay that can support the interposer.. I haven’t found a way to order them individually yet, or gotten confirmation if they are compatible with standard cases.

Yeah, I’ve actually seen that too. If you google the part number, MCP-220-93703-0B, you should get a couple of stores selling them individually. But looking at the pictures of the Storage Bridge Bay, the trays look different than the ones on our SC847 JBOD, so I’m not sure if they will fit.

Nice post. Lots of excellent information.

I do have a quick question for you. Now that the E26 chassis is available, would you still use the 9211-8i cards? If so, how many would you buy? As far as I can tell, you’d only need one. I can’t really find any information on how the backplane on the E26 chassis divides the drives into channels.

Great article!

What is the community’s experience with scrubbing / resilvering such data volumes? We have 11-drive RAIDZ2 vdevs, a few of them in one volume. Drives are 1-2TB 7200RPM SAS drives, and we experience huge performance degradation during these operations. We are running the snv_111b release.

Have you looked at using a more recent OpenSolaris rev? I haven’t noticed severe performance lag, but then again, we also have enough SSD cache that most of the reads seem to hit the cache..

Nice article, gave me some ideas but also some questions.

I’ve got an HP DL120G6 with a dual-core CPU sitting idle in the server room.

I’ve also got some 2GB hard disks and a few X25-Ms lying around.

So basically I’ve got the ingredients to give this a try.

The goal would be to create an NFS server for backing up nightly VMware snapshots.

my questions:

Aren’t you worried about wearing out your SSD cache drive?

Does Solaris/Nexenta support TRIM?

Does the dedup/compression feature require a lot of cpu power and memory?

Where do I install the OS? Do I need a separate drive for this, or can it also be on the ZFS RAID?

Is Solaris any good for NFS compared to Linux?

Sorry to bring up such an old topic, but does anyone have any idea whether the new E16 version requires the use of SATA2 drives for throughput to be 6Gbps per channel? Or would I still be able to use SATA1 3Gbps drives, and would the backplane multipliers still give me the benefit of 6Gbps per six drives?

Thanks

I stumbled onto this blog after a Google search, and I have been impressed with your work. The E26 chassis is out, and I wanted to ask what you would have done differently based on your experience with this rig. Also, do you rate this as enterprise class? I am planning to build one with NexentaStor + HA plugin for our critical data with high IOPS requirements. Any words of advice?

Cheers

Zaeem

Hi there,

Thanks for an interesting post. I’ve also looked at this as a ZFS storage option but I was put off the E-series chassis after seeing reports of problems with SAS expanders and SATA disks. See here for example:

http://gdamore.blogspot.com/2010/12/update-on-sata-expanders.html

Have you had any related problems?

I was also put off going to the 6Gbps SAS2008 because it reports the disk WWN, resulting in very long ‘t’ numbers. I presume the main nuisance is mapping the disk WWN to the chassis slot number; also, when you replace a failed disk, do you have to find the different ID of the replacement disk to be able to run zpool replace?
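Under MPxIO (scsi_vhci), the long ‘t’ part of the device name is the disk's WWN, as the `c5t5000C5001A3734C3d0s2 -> ../../devices/scsi_vhci/disk@g5000c5001a3734c3:c` link earlier in this thread shows. Here is a small sketch that splits such a name into controller, WWN, LUN and slice, and rebuilds the lowercase `disk@g...` node name, so the WWN can be matched against whatever slot inventory you keep. The regex assumes the cXtYdZ[sN] form seen here.

```python
import re

# cN, then t<hex WWN>, then dN, optionally followed by sN for a slice.
# Backtracking lets the hex group give back the final "d" to the LUN part.
CTD_RE = re.compile(r"^c(\d+)t([0-9A-Fa-f]+)d(\d+)(?:s(\d+))?$")


def parse_ctd(name: str) -> dict:
    """Split a cXtWWNdY[sN] device name into its parts."""
    match = CTD_RE.match(name)
    if match is None:
        raise ValueError(f"not a cXtYdZ device name: {name!r}")
    controller, wwn, lun, slice_ = match.groups()
    return {
        "controller": int(controller),
        "wwn": wwn.upper(),
        "lun": int(lun),
        "slice": int(slice_) if slice_ is not None else None,
    }


def vhci_node(wwn: str) -> str:
    """Node name used under /devices/scsi_vhci for a given WWN."""
    return f"disk@g{wwn.lower()}"


info = parse_ctd("c5t5000C5001A3734C3d0s2")
print(info["wwn"], vhci_node(info["wwn"]))
```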

I was therefore considering using the older SAS3081E-R cards, but you’ve mentioned a bug with them. unfortunately bugs.opensolaris.org now seems to have died (thanks Oracle!). Do you know any other link for details of this bug and its status?

“….‘TQ’ style – not available for the SC847 cases, but in the SC846 chassis an example part number would be ‘SC846TQ‘. This backplane provides an individual SATA connector for each drive — in other words, you will need 24 SATA cables, and 24 SATA ports to connect them to. This will be a bit of a mess cable-wise.. with the SFF-8087 option, I don’t know why anyone would still be interested in this – if you have a reason, please comment!…”

Well, SAS expanders + SATA disks can give problems. In that case SATA cables might be better, maybe?

http://gdamore.blogspot.com/2010/12/update-on-sata-expanders.html

This is interesting. I just got ahold of a Supermicro chassis (36-drive), but I’m having trouble figuring out what it is. The backplane has 6 SAS connectors near the front of the chassis, so you don’t run individual cables to each drive. I think maybe this makes it an EL2 chassis? Using an Areca RAID card, I can’t get any SATA drives in the drive bays to be recognized. But if I connect the drives directly to the Areca card (hanging out of the case, not using the drive bays), then they are recognized. Any ideas?

Chris, I am having the same problem on a new build I am working on. I am running the 846EL26 chassis and an Areca 1882i RAID card. The SAS drives fire up just fine, but no luck on SATA drives. I was wondering if you ever figured anything out on this. Any help will be greatly appreciated. Thank you.

I got this one figured out with some help from Areca and Supermicro tech support (both were very helpful, timely and professional). It basically comes down to this: if you want to mix SAS and SATA, then you cannot use the second expander on the backplane. So you plug the RAID card into PRI_J0 only and do not connect SEC_J0. Also, if anyone is wondering, this recognized a Seagate SATA drive, a WD Caviar Black SATA (without using the TLER utility) and a WD RE4 drive. I was just testing random drives and happened to have those in when I finally found the solution. Please note that I did not test the reliability of any of those, just that they showed up and allowed me to create a RAID set, and then I removed them. I hope this information helps someone.

Also, thank you for this great post, it was very helpful when planning my build.

Very interesting, thanks for the update! I suppose that makes sense – when you connect to both ports (to allow multipath), the backplane probably flips to SAS-only mode, since SATA drives don’t support that. I had never realized that though – my assumption was that SATA drives would just be addressed over the first path.

There are SAS<->SATA interposers that you can use to allow SATA drives to run with full multipath capabilities on the SAS bus (discussed here – http://www.supermicro.com/support/faqs/faq.cfm?faq=11347); I have always wondered how to install the drive with the interposer in front of it, and that FAQ seems to answer it.. however, it may be specific to the drive trays included with the SBB systems too. Looking on the SBB page (http://www.supermicro.com/products/system/3U/6036/SYS-6036ST-6LR.cfm), the P/N for the drive sled is ‘MCP-220-93703-0B’, and the description is ‘Support SATA/SAS HDD tray with LSI converter bkt’, so yup, sounds like a special tray.. I will have to dig into that though.

Thanks for the info – very good to know!

I don’t see any interposers working with this case setup, so I think I am out of luck there. Do you happen to know if every SAS2 drive supports multipath? I am looking at some Toshiba 7200 RPM SAS2 drives, but nothing specifically calls out whether they support this configuration ( http://storage.toshiba.com/docs/services-support-documents/compatibilityguide_mkx001trkb.pdf?sfvrsn=0 ). Thank you for any thoughts.

I asked Mike@IPhouse on his blog, so I will ask you the same.

Any thoughts on this build a year-ish later? How is the reliability/stability? How is the Supermicro stuff holding up? Anything you would have done differently? (I am considering something similar and am interested in how yours ended up panning out.)

Appreciate any thoughts.

Still running pretty well!

Things I would have done differently..

Really, that’s about it. Quite happy with the solution overall.. just took a little bit to figure everything out, and to realize that dedupe should be *off*! Also – we did have some problems with support at Nexenta in the early days, but we were always able to escalate and get the issues addressed. However, this appears to have been growing pains.. things are far better now, including a real support portal where all registered geeks in the org can view and update cases together, etc.

Excellent, nice to hear indeed! and thanks for passing along the feedback!

I will definitely take your suggestions into consideration and make some sort of blog post of my own to document my build, assuming I go this route.

Are you still using the onboard 1Gb NICs to connect to your switch? Are you using LACP? What kind of switch did you end up going with?

I’m assuming we will be adding a dual 10GbE adapter to our build (it’s to house datastores for ESX), but I’m curious to know what others did.

Oh, whoops. :) I forgot that this was for our very first node buildout, and does not reflect what we actually ended up doing for our full-scale production nodes.

Differences in our real build from the above:

* We went with 48GB of memory instead of 24GB.

* We went with 1TB/2TB 7200rpm SAS2 drives instead of SATA.

* We went with 2x 300GB 15K SAS2 drives for the boot disks instead of SATA.

* We went with 2x 128GB RealSSD C300’s for the L2ARC (read cache), and 6x 32GB Intel SLC SSDs in three mirrors for the ZIL.

* We added 2x Intel quad-port gigabit ethernet cards to the build, for a total of 10 GigE ports.
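For reference, the cache and log part of that layout (two L2ARC devices plus six SLC SSDs as three mirrored log pairs) maps onto a single `zpool create` line. This sketch only builds the argument list. Every device name, the pool name and the raidz2 data vdev are hypothetical placeholders, since the post does not describe the data-disk layout.

```python
import shlex


def zpool_create_argv(pool, data_vdevs, log_pairs, cache_devices):
    """Build argv for `zpool create` with mirrored log devices and cache devices."""
    argv = ["zpool", "create", pool]
    for vdev_type, disks in data_vdevs:
        argv += [vdev_type, *disks]
    if log_pairs:
        argv.append("log")  # one "log" keyword, then one "mirror" per pair
        for first, second in log_pairs:
            argv += ["mirror", first, second]
    if cache_devices:
        argv += ["cache", *cache_devices]  # L2ARC devices are not mirrored
    return argv


argv = zpool_create_argv(
    pool="tank",  # hypothetical pool name
    data_vdevs=[("raidz2", [f"c1t{i}d0" for i in range(8)])],  # hypothetical data disks
    log_pairs=[("c2t0d0", "c2t1d0"), ("c2t2d0", "c2t3d0"), ("c2t4d0", "c2t5d0")],
    cache_devices=["c3t0d0", "c3t1d0"],
)
print(shlex.join(argv))
```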

For networking, we are terminating these into our Cisco 6509 switches.. they have a SUP720-10G but only a limited number of 10Gb ports, so we went with dual port channels of 4 ports each.

I should note that if I were doing this today, I wouldn’t go with the Intel SSDs anymore – find an SLC SSD with a 6Gb/s interface. The Micron 50GB P300 drives *rock*, and are somewhere around $600 each.

Finally got all my orders in for all the gear (finding hard drives was a pain, but I’m sure everyone is having that problem nowadays). I posted a sanity check regarding using Nexenta as primary storage; feel free to check it out and weigh in if you have any opinions on the topic. I’m attempting to spur discussion there, lol.

http://nexentastor.org/boards/1/topics/4400

Just thought I’d let you know that with the SuperMicro backplanes dual uplink is supported. So with the SC847E1(6) you’d effectively have 2 x mini-SAS connectors for 8 lanes giving double the bandwidth you thought you had. Also, be aware that the chassis uses expanders not port multipliers. Hope that helps in future use :)

What do you think of the new Intel 311 SSD 20GB as a ZIL device?

It seems to be pretty similar to the outgoing X25-E with about half the cost per GB and a smaller size (which is helpful as ZIL drives only need to be small and therefore large ones are a monetary waste).

The only thing it lacks is a supercapacitor, but that shouldn’t be a problem if you have dual PSU’s connected to dual UPS’s connected to separate circuits from separate power companies that have separate power line & power station inputs ;)
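On the "ZIL drives only need to be small" point: a common rule of thumb is that a separate log device only has to hold the synchronous writes that can arrive before ZFS commits them to the pool, roughly a couple of transaction groups' worth at the maximum ingest rate. Here is a sketch of that estimate. The 5-second transaction-group interval and the two-groups-in-flight factor are assumptions (both depend on the ZFS release and tuning). The 10 Gb/s ingest figure assumes all ten GigE ports of the production build are saturated with sync writes.

```python
def slog_size_gb(ingest_gbit_s: float, txg_seconds: float = 5.0, txgs_in_flight: int = 2) -> float:
    """Rough slog capacity (GB) needed to absorb sync writes until they reach the pool.

    txg_seconds and txgs_in_flight are rule-of-thumb assumptions, not ZFS constants.
    """
    bytes_per_second = ingest_gbit_s * 1e9 / 8
    return bytes_per_second * txg_seconds * txgs_in_flight / 1e9


needed = slog_size_gb(ingest_gbit_s=10)  # ten saturated GigE ports
print(f"{needed:.1f} GB of log space; a 20GB device {'fits' if needed <= 20 else 'is too small'}")
```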

How have you found the Intel X25-Es in your setup? There’s been a lot of talk about using SATA SSDs with interposer cards and the possibility for interrupt and scsi reset choking…have you had any issues with them?

We’ve had a few die, but they die hard, not start throwing errors.. to be expected for the amount of I/O being slammed against these.

I have not used them with interposers, but I’ve heard similar things about any SATA device on an interposer.. that it just causes problems in the long run. ;( I can’t really comment specifically though..

Ah OK. Were you able to RMA them?

It’s unfortunate that there is such a price-premium between SATA & SAS drives, especially in the “post-flood” market.

We’re looking at getting about 5 servers and have had to totally rethink our plans with the cost of 3TB SAS drives going from 11.6c/GB to 30+c/GB.

In the end we’ve decided to buy a server with a crapload of Seagate GoFlex 3TB drives (and rip out the Barracuda XT from inside) and make do with that, initially, until there is some more price stability. We’ll relegate that to “ZFS Backup” utility when we can afford to get the SAS drives for our main storage server pair (now delayed in purchasing).

“….‘TQ’ style – not available for the SC847 cases, but in the SC846 chassis an example part number would be ‘SC846TQ‘. This backplane provides an individual SATA connector for each drive — in other words, you will need 24 SATA cables, and 24 SATA ports to connect them to. This will be a bit of a mess cable-wise.. with the SFF-8087 option, I don’t know why anyone would still be interested in this – if you have a reason, please comment!…”

I will use a similar case with ASUS P8B-C/SAS/4L motherboard.

It has 8 SAS 6Gb/s, 2 SATA 6Gb/s, and 4 SATA 3Gb/s ports, but only SATA connectors (14 SATA connectors in total!).

Can this motherboard be used with the “A” case? I know SFF-8087 to 4x SATA cables exist, but I’m not sure whether such a cable can be used here.

Just wanted to drop a big thank you for this article. We recently did a VMware lab storage server build-out and referenced this post a lot in our planning. We ended up with some pretty good performance on this chassis, although we didn’t end up using ZIL or L2ARC drives. Since our server is primarily for a lab environment without strict performance requirements, we wanted to determine what we could accomplish with just parts we had lying around from other devices (the only things we actually ordered were the chassis and an Intel Quad Port ET2 NIC). Our numbers on the box were:

Initial write of 500MB on 5 threads (a total of five 500MB files)

Initial Write: 1,292.01 MB/s

Re-Write: 1,308.76 MB/s

Read: 3,560.75 MB/s

Re-Read: 3,655.25 MB/s

Reverse Read: 3,136.23 MB/s

Stride Read: 2,804.91 MB/s

Random Read: 2,499.73 MB/s

Mixed workload: 1,981.39 MB/s

Random Write: 369.06 MB/s

Fwrite: 1,248.06

Fread: 3,365.72

We used the following parts in our build:

1x Intel S5520HC Server board with two 5620 CPUs and 24GB of memory

1x Supermicro SC847A-1400LPB Storage Chassis

4x Adaptec 5805Z RAID Controllers with ZMM super caps

1x Intel Quad Port ET2 Gigabit NIC

36x Western Digital RE4 500GB drives (Storage drives)

2x Hitachi 2.5″ SATA HDDs (for running our OS in a mirrored array)

8x Tripp Lite SFF-8087 to SFF-8087 cables (we didn’t have enough controller ports to connect all 9 backplane connectors to RAID controllers)

OS: Sun Solaris 11 Express

One thing we attempted (and failed at) was to see if the 4 additional onboard (motherboard) SATA ports would detect and run the last 4 drives on the rear backplane. We only have 5 PCIe slots on our motherboard and we needed one for the NIC. Since the only low profile SAS/SATA cards I could find only had a max of 2 SFF-8087 ports on them we knew we would only be able to use a max of 32 drives via RAID controllers. I was hoping the onboard SATA ports (via a reverse breakout cable) would run the final 4 drives for a pair of ZIL drives and a pair of L2ARC drives so we tried initially to get 4 standard SATA WD RE4 drives to work but the onboard controllers wouldn’t detect any of the drives in that backplane. Not a huge deal as those 4 drives aren’t going to break the bank on capacity (we ended up with 12 TB). Adaptec said they are coming out with a low profile card that has up to 6 ports on it and when they finally do I’ll switch to that so I can use all the drive bays. As of right now I’m not seeing a need for a ZIL or L2ARC drive setup unless I’m missing something.
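The 32-drive ceiling follows from the port count on the passive "A" backplane, where each SFF-8087 connector carries four lanes and each lane serves one bay. A quick check of the numbers in this comment (four RAID cards with two SFF-8087 ports each, 36 bays):

```python
LANES_PER_SFF8087 = 4  # one lane per drive bay on a passive backplane
BAYS = 36              # 24 front + 12 rear


def reachable_bays(cards: int, ports_per_card: int) -> int:
    """Bays that can be wired directly to controller ports."""
    return cards * ports_per_card * LANES_PER_SFF8087


backplane_connectors = BAYS // LANES_PER_SFF8087  # connectors on the backplanes
wired = reachable_bays(cards=4, ports_per_card=2)
print(
    f"{backplane_connectors} backplane connectors, {wired} bays wired, "
    f"{BAYS - wired} left for the onboard SATA ports"
)
```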

I hope you can help me.

Where can I get something like this?

Minimum System Configuration required

(Procured by customer)

CPU

Intel Core i7 975 3.20 GHz processor or better

Motherboard

Supermicro motherboard X8SAX

RAM

DDR III – 3GB X 3 units

Hard Disk Drives

Minimum 1 TB Hard Disk

Optical Drive

DVD Writer – SATA lg 24x

Operating System

Windows 7 Ultimate license

THANK YOU….

This really doesn’t have much to do with building consumer PCs.. but NewEgg will sell all of those parts. If you want it preassembled, just search for an authorized SuperMicro VAR.

We’re tackling a project very similar to the one you have here; your own parts list played a major factor in deciding which parts we might need to use. Here’s the rub: how in the heck are the drives all supposed to be wired? The wiring diagrams that came with the SC847E26-R1400LPB are not clear enough. Do you have a pic of your wiring that we can compare to? I’m assuming it’s too much to ask if you have a wiring diagram already drawn up…. :-)

Thanks!

I’ve got a technical question regarding your article on the Supermicro SC847 chassis.

I have purchased the newest 847-E26 JBOD chassis (45 drives, 24 front, 21 back), and it’s got the multipath support for each expander.

Cutting to the chase/question… will regular SATA (not near-line SAS) drives work with these expanders? The manual suggests that SAS drives (or near-line SAS) are *required*, and that SATA drives cannot be used… but it’s unclear if they are referring to best practice or if you want to leverage multipath support. Quote from page 97 of the manual below:

“There is also dual version of the same expander which has two expander chips, therefore it is necessary to have two paths to each drive behind the expander. It is mandatory to use SAS drives when using dual expander backplanes. This is because the SAS drives have dual connectivity, therefore enabling the redundancy feature. No SATA drives should be used in dual expander backplanes”

In my case, I purchased 45 of the new Hitachi Ultrastar 4TB drives, which are enterprise SATA. They are NOT NL-SAS drives so they do not have a second data port for multipath. If I do not care about multipath and simply want an enormous dumping ground of storage where speed is not important… will these drives work from a pure technical standpoint?

I don’t know the definitive answer.. but my understanding is that it should work, but only on one path. That may not be correct (i.e. the firmware on the expander chips may refuse to enable drives that do not support both paths, or similar), but hey, give it a shot with a drive? :)

You may have better luck asking SuperMicro or one of their resellers..

I’ve got two 2TB sata drives installed into slot #1 and slot #24 on the front side of this JBOD. I had to use my own personal SFF-8088 cable to go from the Areca to the bottom connector on the back of the Supermicro, and I chose slot # 1 & 24 to make sure the expansion was spanning all slots.

It works fine for me so far with just 2 SATA drives. I need another SFF-8088 cable to go into the 3rd external port connected to the 21-drive rear expander before this is cabled up the way I want (I don’t want to bridge the two expanders together on the inside… I’d rather use 2 dedicated channels, 1 per backplane, to maximize bandwidth).

So far so good… I also happened to get a reply from Supermicro today confirming it should work with no problem, but I won’t get the advantages of the E26 expanders (concurrent channels) if I do not use SAS drives. Although that’s a bummer… there are no 4TB NL-SAS drives on the market now, and our need is to get as much storage as possible. This will give us 144 TB of storage when all is said and done.

Hi,

Thanks for this post, it really helped me make the purchase for our server.

So we purchased a similar chassis, motherboard and HBA card, but I’m not able to use the “locate” LED for drives connected to the HBA card. I can successfully activate the “locate” LED for hard drives connected to the motherboard.

I flashed the same firmware and BIOS as the internal SAS controller onto the HBA cards and updated the driver, but nothing works.

I am using Debian Squeeze as the OS and the sas2ircu utility to trigger the “locate” LED.

Does anyone have any idea what the problem is?

Thanks,

Jonathan
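For anyone debugging the same thing, here is a minimal Python sketch of driving the locate LED through sas2ircu. It assumes the `sas2ircu <controller #> LOCATE <Encl:Bay> ON|OFF` form from LSI’s SAS2IRCU documentation; the controller index, enclosure number and bay below are hypothetical placeholders, and the real values would come from `sas2ircu LIST` and `sas2ircu <controller #> DISPLAY`. With an onboard SAS controller also present, the add-in HBA may not be controller 0, so that index is worth double-checking.

```python
import subprocess


def locate_cmd(controller: int, enclosure: int, bay: int, on: bool = True) -> list[str]:
    """Build a sas2ircu LOCATE command line using Encl:Bay addressing."""
    action = "ON" if on else "OFF"
    return ["sas2ircu", str(controller), "LOCATE", f"{enclosure}:{bay}", action]


def set_locate_led(controller: int, enclosure: int, bay: int, on: bool = True) -> str:
    """Run sas2ircu and return its stdout.

    Raises subprocess.CalledProcessError if sas2ircu exits non-zero,
    and FileNotFoundError if sas2ircu is not on the PATH.
    """
    result = subprocess.run(
        locate_cmd(controller, enclosure, bay, on),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


if __name__ == "__main__":
    # Hypothetical values: controller 1, enclosure 2, bay 5.
    # Only prints the command, so this runs even without sas2ircu installed.
    print(" ".join(locate_cmd(1, 2, 5)))
```

Calling `set_locate_led(1, 2, 5)` would actually run the command; passing `on=False` turns the LED back off.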

Excellent write up, I was a bit lost as to where to install the MCP-220-84701-0N tray in our 6047R-E1R36N storage array as the manual was a bit cryptic. With this article, I was able to infer that the tray goes underneath the motherboard tray, which, by reading the manual, one would not know is even removable. Thanks!

Have you looked at the Solaris croinfo command?

It’s good for backplane management.

Here is a link showing how I tried it:

http://forums.overclockers.com.au/showpost.php?p=14424399&postcount=752

Stanza

Hello,

Could someone please explain the purpose of using 9x SFF-8087? If I am not mistaken, the E16, for example, has 2x SFF-8087, each carrying four 6 Gbps channels, which means 24 Gbps per SFF-8087. So the front 24 HDDs get 24 Gbps (1 Gbps per HDD, which is roughly an HDD’s maximum), and the rear 12 HDDs get 24 Gbps, twice what those HDDs can use, so SSDs could be added there.

So what is the point of getting the A version with 4x two-port LSIs when the E16 version could do it more simply?
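The arithmetic in the question can be checked with a short calculation. This sketch assumes the layout the comment describes (one SFF-8087 cable of four 6 Gbps lanes per backplane, 24 front and 12 rear drives) and the comment’s rough figure of about 1 Gbps sustained per 7200rpm drive. It also applies the 8b/10b line encoding used on 3 and 6 Gbps SAS links, which leaves 80% of the raw line rate for data. The older 3 Gbps case (one cable shared by 24 drives, i.e. 6 drives per lane) is included for comparison.

```python
LANES_PER_SFF8087 = 4          # one SFF-8087 (mini-SAS) cable = 4 lanes
ENCODING_EFFICIENCY = 8 / 10   # 8b/10b encoding on 3G/6G SAS links
HDD_GBPS = 1.0                 # rough sustained rate of a 7200rpm disk (assumption)


def per_drive_gbps(drives: int, cables: int, lane_gbps: float) -> float:
    """Usable Gbps each drive gets when every drive on the link streams at once."""
    usable = cables * LANES_PER_SFF8087 * lane_gbps * ENCODING_EFFICIENCY
    return usable / drives


CONFIGS = [
    # (label, drives sharing the link, SFF-8087 cables, lane rate in Gbps)
    ("E16 front backplane", 24, 1, 6.0),
    ("E16 rear backplane", 12, 1, 6.0),
    ("3G expander, 24 drives on one cable", 24, 1, 3.0),
]

for label, drives, cables, lane in CONFIGS:
    share = per_drive_gbps(drives, cables, lane)
    print(f"{label}: {share:.2f} Gbps per drive ({share / HDD_GBPS:.1f}x one HDD)")
```

After encoding overhead, the front backplane works out to about 0.8 Gbps per drive, slightly under the 1 Gbps the comment assumes. The rear backplane has about 1.6 Gbps per drive, so there is headroom there.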

Thanks

You need a separate link for each HDD unless you have an active backplane.

What do you mean? I have the E16 backplane, which connects to the motherboard via SFF-8087. The HDDs are not directly connected via SATA.

It looks like the article is about the A version, and the E16 did not exist at that time, so the solution was to use a few LSI HBAs to get good throughput instead of 3 Gbps shared by every 6 disks. The newer E16 version already provides 6 Gbps per 6 disks, which is enough throughput for 7200rpm SATA disks.

The E16 has an active (expander) backplane, so you don’t need 9x SFF-8087.
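The “9x SFF-8087” figure follows from lane counting. With a passive (direct-attach) backplane, every bay needs its own lane and each SFF-8087 carries four, while an expander backplane lets many bays share a few lanes. This sketch assumes 24 front and 12 rear bays and the one-cable-per-backplane E16 layout described earlier in the thread.

```python
import math

LANES_PER_SFF8087 = 4  # one SFF-8087 (mini-SAS) cable carries four lanes


def cables_for_direct_attach(bays: int) -> int:
    """Passive backplane: each bay needs a dedicated lane."""
    return math.ceil(bays / LANES_PER_SFF8087)


FRONT_BAYS, REAR_BAYS = 24, 12

direct = cables_for_direct_attach(FRONT_BAYS) + cables_for_direct_attach(REAR_BAYS)
expander = 2  # E16 as described in the thread: one cable per backplane (assumption)
lanes_shared = expander * LANES_PER_SFF8087

print(f"direct attach: {direct} x SFF-8087 (one lane per drive)")
print(f"expander backplanes: {expander} x SFF-8087 "
      f"({(FRONT_BAYS + REAR_BAYS) / lanes_shared:.1f} drives per lane)")
```

The trade-off is cabling and HBA ports versus bandwidth: the direct-attach version needs 9 cables but never oversubscribes a lane, while the expander version needs 2 cables and puts 4.5 drives on each lane.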

Hi, we have a 36-bay Supermicro storage server like yours, but only 16 HDDs come online; when we insert the others, the warning alarm goes off.

Can you help me figure out what the problem is?

Also, we want to connect it to another storage unit. Do you have a manual or guide?

Thanks